Testing CockroachDB, more on Resilience ...

TL;DR: I'm simulating the failure of more than one node and find some weird behaviour. I'm learning how to operate a cluster. Repair is not always completely automatic?

Spoiler: The cluster can remain Resilient, but you need to keep monitoring your cluster closely. There are some things I cannot quite explain, yet.

Do I need to RTFM more? => Edit: Yes. See the answer to the question below.

Background: Distributed Databases are Resilient.

I'm experimenting with a distributed database, where every data-item is replicated 3x (replication factor of 3) over a 3-node cluster. Data remains "available" as long as every object has a quorum, in this case 2 votes out of 3. From previous experiments (link), I already know that an outage of 1 node is not a problem, but an outage of 2 nodes out of 3 is fatal. In the previous experiment I showed how I could "fix" the loss of a single node by adding another, fourth, node to bring the cluster back to 3 live nodes and a resilient state.
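The quorum arithmetic can be sketched in a few lines of Python. This is only an illustration of majority voting; the function names are made up for this example and are not part of CockroachDB.

```python
def quorum(replicas: int) -> int:
    """Smallest number of votes that forms a strict majority."""
    return replicas // 2 + 1


def tolerated_failures(replicas: int) -> int:
    """How many replicas can be lost while a majority still remains."""
    return replicas - quorum(replicas)


for rf in (3, 5):
    print(f"RF={rf}: quorum={quorum(rf)}, tolerated failures={tolerated_failures(rf)}")
```

With RF=3 the quorum is 2, so one lost replica is survivable and two are not, which matches the behaviour described above.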

I continued my exploration.

The history of my cluster is:

Start with a three node cluster, no warnings on the dashboard.

First node, named roach1, fails (killed via docker).... No impact on availability, but the two remaining nodes report "under replication" because every object now only has 2 copies available. But 2 out of 3 is still "quorum", hence data remains available and database remains functional.

Second node, named roach2, also fails (killed via docker)... Database stops functioning. Bringing the 2nd node back up lets the database resume operations. With only 2 nodes, the database is not Resilient anymore.

Adding node nr4, named roach4. Cluster now consists of 3 nodes again: roach2, roach3 and roach4. Dashboard shows under-replicated ranges.

The under-replication disappears after the time needed for re-balancing, and the cluster is again "resilient against failure of any one node".

Again, killing node2, named roach2: database remains working because the nodes 3 and 4 still have quorum. Since we now only have two nodes, all ranges are under-replicated.

Adding node nr5, named roach5. Cluster comes back to 3 nodes, and should re-balance to become resilient again... No??

This is where the anomaly starts...

I now have a 3 node cluster again, with nodes : roach3, roach4 and roach5. My replication factor (RF) is also 3. I would expect this system to balance out and become resilient again.

Instead: I remain stuck with some 61 under-replicated ranges. And any stop of roach3 or roach4 leads to a stop of the database. However, I can stop the 5th node, roach5, without impact on the running insert-process.

Meanwhile, the dashboard looked like this:

I was left with 61 under-replicated ranges, hence a non-resilient cluster.

I've let it run for over 12 hours (overnight), but the under-replication remains. By contrast, when I removed and replaced the 1st node, roach1, the under-replication seemed to disappear after some 5-10 minutes (which I think is documented). But removing and replacing the 2nd node doesn't seem to lead to a re-balancing over the 3 remaining nodes: 1 old and 2 newly added.

I was curious to know which ranges (and which objects) would be under-replicated and why ?

Inspection: Using crdb_internal to examine ranges..

First I queried the node-views in crdb_internal, to see how the ranges and leases were spread out:

And it seems the last-added node only received a small number of ranges and leases. That did not look like a well-balanced 3-node cluster to me.

I went ahead and queried them anyway, hence you will see the warnings on my screenprints.

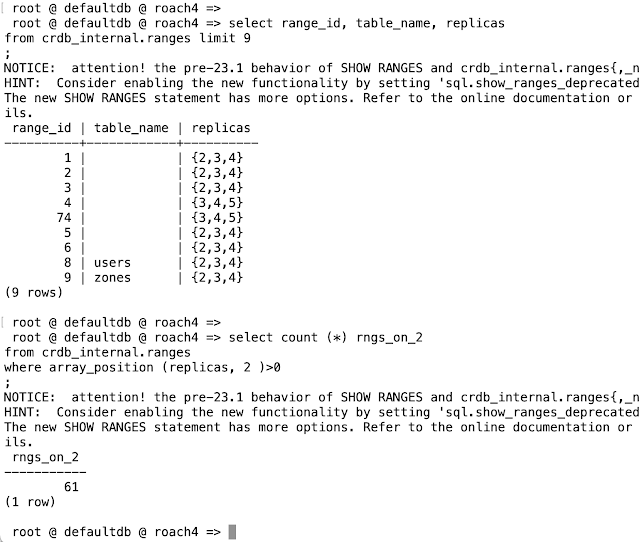

Quick inspection of the ranges:

Aha, some of the replicas are still on the 2nd (dead) node called roach2, possibly hoping that node will come back sometime. And the count of those is ... 61, exactly the number of under-replicated ranges. It partly makes sense: any range that still has a replica on roach2 will lose quorum and become unavailable as soon as one of its remaining 2 replicas becomes unavailable.
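A small Python sketch of this reasoning, under stated assumptions: the replica placements below are hypothetical examples (one range that still lists dead roach2 as a replica, one that has already moved to the live nodes), and has_quorum is an illustrative helper, not a CockroachDB function.

```python
def has_quorum(replica_nodes, down_nodes):
    """True if a strict majority of the range's replicas sit on nodes that are up."""
    live = [n for n in replica_nodes if n not in down_nodes]
    return len(live) >= len(replica_nodes) // 2 + 1


# Hypothetical placements, chosen to mirror the situation in this post.
ranges = {
    "catalog range": [2, 3, 4],  # still counts dead roach2 as a replica
    "user range": [3, 4, 5],     # already moved to the three live nodes
}
dead = {1, 2}

# Stop one more node at a time and see which ranges keep a majority.
for extra in (3, 4, 5):
    down = dead | {extra}
    for name, replicas in ranges.items():
        state = "available" if has_quorum(replicas, down) else "UNAVAILABLE"
        print(f"stop roach{extra}: {name} {replicas} -> {state}")
```

In this model, stopping roach3 or roach4 makes the range with a replica on roach2 unavailable, while stopping roach5 does not, which is consistent with what I saw on the running insert-process.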

On further inspection, it also appears those ranges only served "catalog" objects. The (very few) user-objects I had created in this database were already moved to the 3 remaining healthy nodes:

The few ranges that no longer replicated to node_id =2 were my self-created tables and sequence, and two ranges serving unknowns (with table_id = 0 ).

But why do the other 61 ranges not get moved to live nodes? (that is a Question for cockroach, see below).

The Fix: decommission the node.

After a bit of help from CRDB support on Slack, and some reading of the manual, I found out that the solution was to use the "decommission" command (link) to remove the now-dead nodes roach1 and roach2 from the cluster.

Immediately after running the decommission, the cluster looked healthy:

No more under-replication in the dashboard.

After the decommission of node 2, the ranges were spread much better over the nodes. Here is the Before and After query of the ranges and leases per node:

Note that the range-count is now higher, some 61 range-replicas were re-deployed on the new node, roach5, leading to a more balanced look. The leases take a little longer to spread out.

The question remains: why can't those ranges move all by themselves? My user-objects had moved nicely, why not also all the catalog objects?

Lessons so far:

Watch out for Under Replication: After "Node-Out Events", Always Check your dashboard, and wait (or help) until the under-replicated ranges have disappeared.

If needed: use "decommission" to force range-replicas to move off the dead nodes and to re-obtain Quorum on live nodes. There is also a "drain" command, which, as far as I understand, moves client connections and range leases (rather than the replicas themselves) off a node before stopping it. I might look into this a bit further in future tests.

What I find important to note: Up to now, All Nodes appear to be Equal, Symmetrical. There are no so called Master-nodes that need additional attention. Although the need for decommission makes me hesitate here ?

Easy (re)move and migration of nodes: Furthermore, I think I now know how to remove all the original, starting, nodes of a cluster. This can be useful in case of upgrades (of hardware or cloud) or migration or move to some other location or to another cloud or datacentre or hosting provider.

--------- End of Blogpost --- some Questions and Remarks below -------

Q: How come the ranges of the first dead node got re-copied onto the remaining live-nodes, but the ranges of the 2nd dead-node had to wait for decommissioning ? And is it a coincidence that those ranges are used for "catalog" data ?

Q: Will/can nodes be automatically de-commissioned after a certain period of being down or not-alive ? Is that configurable ? Or would you recommend to script that?

---- > Edit: Answer from Shaun @ CRDB, via Slack :

Even with 2 dead nodes, it became a 5-node cluster when adding roach5. From that point on, the system-ranges (which store the catalog), will have a preference to become RF=5. Hence the system-ranges wanted to stay on 2nd node, aiming for RF=5 as soon as possible. It was only on decommissioning node 2 that the system-ranges "lost that preference" and went back to RF=3.

Note to self: This is definitely a hint to better RTFM.

/End Edit < ----
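One way to read this answer is as a small model in Python. This is a simplified sketch and not CockroachDB's actual allocator logic. It assumes that dead nodes keep counting towards the cluster size until they are decommissioned, that the system ranges are configured for 5 replicas, and that an even target is rounded down to the next odd number. The function name and the step labels are made up for this example.

```python
def effective_rf(configured_rf: int, counted_nodes: int) -> int:
    """Replication factor a range aims for in this simplified model.

    counted_nodes = all nodes that are not decommissioned, dead ones
    included (an assumption based on the answer above).
    """
    target = min(configured_rf, counted_nodes)
    if target % 2 == 0:  # an even replica count adds no extra fault tolerance
        target -= 1
    return max(target, 1)


# (step in the cluster history, nodes that still count)
steps = [
    ("roach1 dead, roach4 added", 4),
    ("roach2 dead, roach5 added", 5),
    ("roach1 and roach2 decommissioned", 3),
]

for label, counted in steps:
    print(f"{label}: system ranges aim for RF={effective_rf(5, counted)}, "
          f"user ranges for RF={effective_rf(3, counted)}")
```

In this model the system ranges only start aiming for RF=5 once the fifth node has joined, and they fall back to RF=3 after the decommission, while the user ranges stay at RF=3 throughout.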

Suggestion: create views to show the relations between object-range-node.

I would suggest using conventional relations, rather than storing sub-relations in array-fields. Relations (tables or views) are easier and more transparent to combine with other info than arrays.

E.g. the relation (table:range), which will probably be an n:m relationship in the near future. And (range:node), which will also be an n:m relationship (unless RF=1 in some cases).

I have already created my own primitive view to link objects (tables, indexes, sequences, mviews) to ranges and thus to nodes. Because there are some array-fields in the range-views, I could use (array-) unnest to get better details.

-- Note: only works with deprecated behaviour !
-- One row per (range, voting replica): unnest() expands the voting_replicas array.
create view crx_vrangereps as (
  select range_id
       , unnest (voting_replicas) node_id
       , table_id, lease_holder
       , case table_name when '' then '-unknown-' else table_name end tn
       --, r.*
    from crdb_internal.ranges r ) ;

This view allows me to easily find the ranges (and thus some of the tables) that depended on nodes 3 or 4: those ranges would have fewer than 3 live replicas (i.e. be under-replicated) and have some replicas residing on nodes 3 or 4.

In general, such a view will help find unsafe-objects, or determine the impact of node-down events for a given node_id. I would probably recommend two such views:

One view to link tables to ranges (n:m): tables can have 1 or more ranges, and some tables may share the same range(s) for efficiency-reasons or other considerations.

Plus a view to link ranges to nodes (1:3, or 1:RF ). I think the "catalog ranges" are already stored with RF=5.
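To illustrate why two plain relations are easy to combine, here is a minimal Python sketch that joins a (table:range) relation with a (range:node) relation. All table names, range ids and placements are hypothetical, and tables_on_node is an illustrative helper, not an existing view or function.

```python
# Two plain relations instead of array fields (all names and ids hypothetical).
# catalog_a and catalog_b share range 5, to show that table:range can be n:m.
table_range = {("t_user", 70), ("seq_user", 71), ("catalog_a", 5), ("catalog_b", 5)}
range_node = {
    (70, 3), (70, 4), (70, 5),
    (71, 3), (71, 4), (71, 5),
    (5, 2), (5, 3), (5, 4),
}


def tables_on_node(node_id):
    """Join table:range with range:node: tables that have a replica on node_id."""
    return sorted({
        table
        for (table, range_id) in table_range
        for (rid, node) in range_node
        if range_id == rid and node == node_id
    })


print(tables_on_node(2))  # tables that still depend on the dead node
print(tables_on_node(5))  # tables with a replica on the newest node
```

The same join, written as SQL over two views, would answer "which objects are affected if node N goes down" without any array handling.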

-------------- Real end of blogpost --------------
