Checking Cockroach : Distributed, Replicated, Sharded.

TL;DR: Some simple testing of data in a table to see how it is "replicated" and "sharded". Spoiler: cockroachDB replicates from the start, but it only shards (splits) when necessary. Hence on default settings you need to add about 512M to a table before any sharding (splitting) occurs. It seems to work Fine!

Background: Distributed Databases, Replication and Sharding.

The two different aspects covered by a Distributed Database are 1) Replication and 2) Distribution/Sharding.

Replication: Offering resilience against outage (storage-, server- or network-faillure), and Sharding. Replication means there are multiple copies of the data in different storage-areas, or even in different physical locations.

Sharding: Offering scalability to more-users and/or parallel processing of large sets. By having data in more manageable chunks (shards, partitions), it can be handled by multiple processes in parallel. Distribution or Sharding (can) offer both higher multi-user capacity and/or faster processing of large sets by enabling better parallel processing.

I want to investigate how cockroach implements those concepts. To that end, I have learned to set op a test/playground cluster of CRDB nodes (link). I have also learned to query some of the internals to find information about tables, about "ranges" (shards) and about "replicas".

Now I want to find out, to Verify if you like: "Where is my data".

And probably later: "What happens if components start to fail..."

Research: Small cluster, Insert Data, and see what happens.

Plan is to build a small cluster of 5 nodes, and to Insert data into just 1 table. If I add data to the table, I expect to see that data going (growing) into 3 places. Because of the default replication-factor of 3, at some point I hope to spot the growing disk-usage on 3 of my nodes. I also want to "find the info" in the internals of cockroach, perferably verify with SQL.

So questions to the system are, Can it tell me or show me:

1) Where, on what nodes, my data is stored, replicated and sharded.

And reversing that:

2) What the effect would be of losing 1 or more nodes on the availability of my data.

With some luck, I also get to observe a "split", which would be the creation of an additional range (shard) for that table as it is growing.

To this end, I built a cluster with just 5 nodes, and I prepared a set of about 1M records, total size of 1G, to insert into a table t. While inserting, I wont use a sequence, so as to not cause updates to any sequence-store (which may or may not be on the same nodes).

I have also learned to query the internal views/tables, although some of those seem to generate an error. To avoid the error I can use a setting, but that setting renders the views less-informative (Question for CR at the bottom of this blog...)

Empty cluster, starting the load...

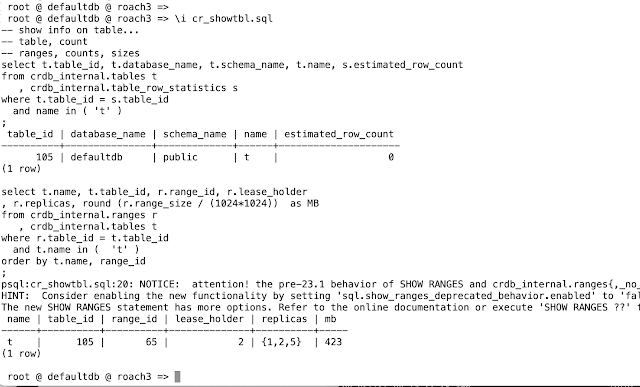

This is what it looks like when the cluster is just created: 5 nodes, and an empty table t. First query shows the nodes, then we count the contents of t (0 records), and we show some of the internals:

The list of nodes in the cluster: node_id 1...5, with nodename and sql-address.

The count of table t: zero records.

The crdb-internal info of table t: table_id=105, located in the dflt-db, and estimated at .. zero rows.

Notice the replica-info on the range: Table t uses range_id 65, and that range seems to have lease-holder=2 and replicas=(1,2, 5) and at the moment 0 Mb in size. From this info, we are expecting the data to go to nodes with node_id in the list (1, 2, 5).

Notice the fact that node_id=2 seems to correspond to nodename (docker-container name) of roach4 as is visible in the first query where node_id and sql-address are listed. Keep this in mind: node_id=2 is nodename=roach4.

The commands to show the contents, wc-wordcount, of the datafile, and the loading are also included on the screenshot: a million lines of data, and the backslash-copy command to start loading it.

Regarding the "NOTICE" when we queried the crdb_internal.ranges, see bottom of this post.

The disk-usage on the "empty" nodes is fetched by a shell-script looping over my containers:

Disk usage on this freshly created cluster is 1323M on the first node, and around 1112M on the other, later-joined, nodes. Time between creation of the nodes(contaniers) was less than 20sec. It seems the first node contains a little more data from the start onwards.

We then let run the copy command to fill table t from the file with a million records and we sit back and observe. We notice that the count of records in table t doesnt increase: The loading is handled as 1 transaction but the size of the "range" for the table starts to grow:

And about 15min in the load we also see that the disk-usage on nodes is increasing on 3 of the 5 nodes:

The disk-usage of roach1, roach4 and roach5 is visibly increasing, faster than the other two. and if we look back at the node_id and node-addresses, we see that those nodenames correspond to node_ids: 1, 2 and 5: The numbers listed as "replicas" for the range of table t.

Note that the increased disk-usage values dont seem to add up to the listed 423Mb from the range, there may already be some compression going on. Something to check later or ask CR about.

But I think I now have proof of "replication": the data for this table seems to spread over 3 nodes, which corresponds to the documented Replication-Factor of 3.

Halfway into loading, the Split!

With some patience, when the size of the range reaches 512M, suddenly the number of ranges increases: The first split has occurred, table t now occupied two ranges (two shards):

Notice that after this split, according to the "replicas", the data still resides on the same nodes, which was sort-of confirmed by the disk-usage: the two "idle" nodes are not reporting higher disk-usage yet.

But the lease-holders (+/- leaders) for each range are different. My guess is the data is kept in place, partly to reduce the initial workload generated by a split: moving part of the data immediately to other nodes would add to the total load, but not bring much advantage yet.

While I was pondering this behaviour, I "blinked my eyes", and the loading was suddenly completed...

Finally, 1 M records loaded.

(Remember, this cluster running in docker on a 10 year old macbook i7, with 16G memory- link).

When loading had finished, the information on table t suddenly looked like this:

(screen crtst06: suddenly 10 ranges/shards)

Notice the table now has an "estimated" 1M records. And apparently the CRDB cluster has decided, near or after the end of loading, that some re-balancing was called for and has (started?) to re-distribute the data over the 5 available nodes.

(Edit: see below at PostScriptum: CRDB was probably still working in the background, and about 1hr later the distribution of ranges was different again..)

Disk usage was now also a little more "spread out" over the nodes in the cluster:

We now see that each node has taken a share of the disk-consumption.

The select count (*) from t, now yields a million records, as expected. And bcse I could not resist, a sum(amount), which is bound to visit every record of the table, gave me the following explain-plan:

(The explain from crdb can generate a url that you can use to cp-paste into a browser - supercool feature)

We notice that for this sum-query all nodes participate in the aggregate, which supposedly makes it faster for large sets of data. Each node can contribute by sum-ming the data in the range (shard) for which it has a replica. This is what "sharded" data looks like, and how large sharded volumes should behave.

QED: We see a Distributed Database: Replicated and Sharded.

Lessons, Observations:

Yes, this is a Distributed Database.

We can see that the data is indeed spread over the node_ids that are listed as replicas in the "ranges". I also notice that CRDB does this all by itself, automated, and for a large part "in the background".

The sharding also feels safely "conservative": CRDB seems to only do sharding (range-splitting over nodes) when the data gets over the 512M threshold. This means that "small" tables remain compact with data located in 1 shard. But the data is always replicated 3x, as every table, even "a single range table", is always kept in 3 replicas right from the start.

Also, if I know (or can find, via the range-info) which table has shards on a given node, I can quickly guesstimate the impact of a problem with that node. I hope to try a little more of that, killing nodes, in future tests.

There are a few other interesting or useful items I found when cobbling together this test:

PSQL works, more or less.

Wire-compatibility helps. The PSQL front-end works "mostly". The \d <table> command still generates an error, but the backslash-copy-utility worked, and was quite useful for my testing. The pg_dump tool doesnt seem to work (yet). maybe something to ask CRDB about.

SQL is Everything and Everything is SQL:

SQL is the most natural interface for anything "Data". And an RDBMS has that SQL-interface (the SQL-API) always available anyway: Please Use It.

An SQL interface to range-information is Good, please keep it. Some of the internal tables/views generate(d) a warning when I queried them:

It seems some of this behaviour is being deprecated or is "under-maintanance". I would like to express my own perference: Please Keep the SQL-interface.

I really appreciate it when internal data can be "queried". Even if the views are not alway consistent because we query straight from memory. SQL allows me to find+combine information "at will" and I much prefer it over pre-baked show commands. The output from a SHOW RANGES command is useful, but it doesnt allow me to join the results immediately to other information. And I like the ability to "find" ranges of a table from an SQL query as much as from the more "proprietary" SHOW-command (personal pet peeve: Rule 4 from Codd... link)

PostScriptum: Re-distribution wasnt finished... ?

After writing up the text above, I found that the re-distribution had yielded less ranges, and possibly a more even distribution:

It seems that CRDB was still working in the background. And the final result looks better than the intermediate I screenshotted above.

-------- End of Blogpost -- some notes and questions below --------

Q1: What if I _know_ a table will be multi-GB in size, and want to pre-split it into several ranges before starting to load or use it ? (My guess there is a facility for this, I should probably RTFM on that...).

Edit: Yes, there are table options, notably "split at" (link).

Q2: How to link ranges to tables without the ranges-view? I used the view crdb_internal.ranges, but it generates warnings. I can disable the warning with sql.show_ranges_deprecated_behavior.enabled=false. But if I use that option, I found the column that allows me to link tables to ranges, the table_id, seems to disappear from the ranges-view. How can I still use SQL to join table to range under the new behaviour ?

Edit, Answer from Shaun@CR: You can wrap a SELECT around that: SELECT * FROM [SHOW RANGES FROM TABLE <table> WITH DETAILS];

Thx Shaun!

Q3: Is any compression effective immediately from the start of the load? The sizes listed for the ranges dont seem to correspond to the disk-usage consumed. In fact, the range-size seems larger than the consumed amount of disk. How does this work out when estimating required disk-sizes for large datasets ?

Edit: Yes, the data is compressed on first storage. And yes, this makes guesstimating actual disk-requirements more tricky. But (my opinion) compression is probably a good thing here. And it is configurable (add more details later...)

Comments

Post a Comment