Yugabyte Distributed database (in docker on macbook...)

TL;DR: I managed to create a 6-node cluster and run a distributed database on it. That got me a nice playground, and some interesting things to investigate.

Background: Serverless, but I want multiple nodes

(mostly kidding: because there is always a server running somewhere)

But, like some of my friends say: Serverless is The Future.

As a database person, I've now started to explore a distributed database. And because I already knew approximately how to use docker containers as "little servers", that was a logical way to start.

I had also already experimented with Yugabyte, both in docker (1 node, 1 container, following these examples) and on the free cloud offering (cloud.yugabyte.com). But those were all still "single node". I could run postgres commands (nice, all of my scripts + demos worked...), but RF=1 is not really what Yugabyte is designed for. I needed more nodes...

Creating and Running multiple nodes.

Luckily, there was this example by Franck:

https://dev.to/yugabyte/yugabytedb-is-distributed-sql-resilient-and-consistent-4llf

I took the copy-paste code and did a little editing... to generate this:

#!/bin/ksh
#
# yb_multi.sh: try creating a multi node yb-cluster in docker
#

docker network create yb_net

# start 1st master, call it node1, network address: node1.yb_net
docker run -d --network yb_net \
  --hostname node1 --name node1 \
  -p15433:15433 -p5433:5433 \
  -p7001:7000 -p9001:9000 \
  yugabytedb/yugabyte \
  yugabyted start --background=false --ui=true

# found out the hard way that a small pause is beneficial
sleep 15

# now add nodes..
docker run -d --network yb_net \
  --hostname node2 --name node2 \
  -p7002:7000 -p9002:9000 \
  yugabytedb/yugabyte \
  yugabyted start --background=false --join node1.yb_net

sleep 15

docker run -d --network yb_net \
  --hostname node3 --name node3 \
  -p7003:7000 -p9003:9000 \
  yugabytedb/yugabyte \
  yugabyted start --background=false --join node1.yb_net

sleep 15

docker run -d --network yb_net \
  --hostname node4 --name node4 \
  -p7004:7000 -p9004:9000 \
  yugabytedb/yugabyte \
  yugabyted start --background=false --join node1.yb_net

sleep 15

docker run -d --network yb_net \
  --hostname node5 --name node5 \
  -p7005:7000 -p9005:9000 \
  yugabytedb/yugabyte \
  yugabyted start --background=false --join node1.yb_net

sleep 15

docker run -d --network yb_net \
  --hostname node6 --name node6 \
  -p7006:7000 -p9006:9000 \
  yugabytedb/yugabyte \
  yugabyted start --background=false --join node1.yb_net

# health checks:
docker exec -it node1 yugabyted status
docker exec -it node2 yugabyted status
docker exec -it node3 yugabyted status
docker exec -it node4 yugabyted status
docker exec -it node5 yugabyted status
docker exec -it node6 yugabyted status

# the messages are quoted: an unquoted "(" is a syntax error in the shell
echo .
echo "Scroll back and check if it all worked..."
echo .
echo "Also verify:"
echo " - connecting cli    : ysqlsh -h localhost -p 5433 -U yugabyte"
echo " - inspect dashboard : localhost:15433"
echo " - inspect node3     : localhost:7003 (and 9003, etc...)"
echo .
echo "Have Fun."
echo .
echo .

Small laptop, Ambitious Datacentre...

The docker-commands worked fine and I could play around with 6 nodes, mostly by stop/starting them from docker, and connecting to them over mapped ports. Notice the portmap-numberings in the 7000 and 9000 range.
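The five near-identical join blocks differ only in the node number, which also drives the port mapping (700N to the master UI on 7000, 900N to the tserver UI on 9000). A minimal sketch of the same thing as a loop follows. The function names and the DRY_RUN switch are my own inventions for illustration, and it assumes yb_net and node1 already exist as in the script above.

```sh
#!/bin/sh
# Sketch only: start the join-nodes 2..N in a loop.
# start_join_node, add_nodes and DRY_RUN are hypothetical helpers,
# not part of docker or yugabyted.

start_join_node() {
  n=$1
  # host port 700N -> master UI (7000), host port 900N -> tserver UI (9000)
  set -- docker run -d --network yb_net \
    --hostname "node$n" --name "node$n" \
    -p "$((7000 + n)):7000" -p "$((9000 + n)):9000" \
    yugabytedb/yugabyte \
    yugabyted start --background=false --join node1.yb_net
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"        # only print the command
  else
    "$@"             # actually run it
  fi
}

add_nodes() {
  last=$1
  i=2
  while [ "$i" -le "$last" ]; do
    start_join_node "$i"
    # keep the pause from the original script; skip it when only printing
    [ "${DRY_RUN:-0}" = 1 ] || sleep 15
    i=$((i + 1))
  done
}
```

Calling `add_nodes 6` would add node2 through node6. With `DRY_RUN=1` set first, it only prints the docker commands, which is handy for checking the port numbers before starting anything.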

The final script is made to automate (the refresh of) my setup. Before running the total script, I started somewhat more carefully, by creating just the first node:

Creating the first node went fine. The function yb_servers() shows this cluster now consists of one node, and from earlier use of the same container-image, I knew I could probably connect and create my first table immediately, and I did.

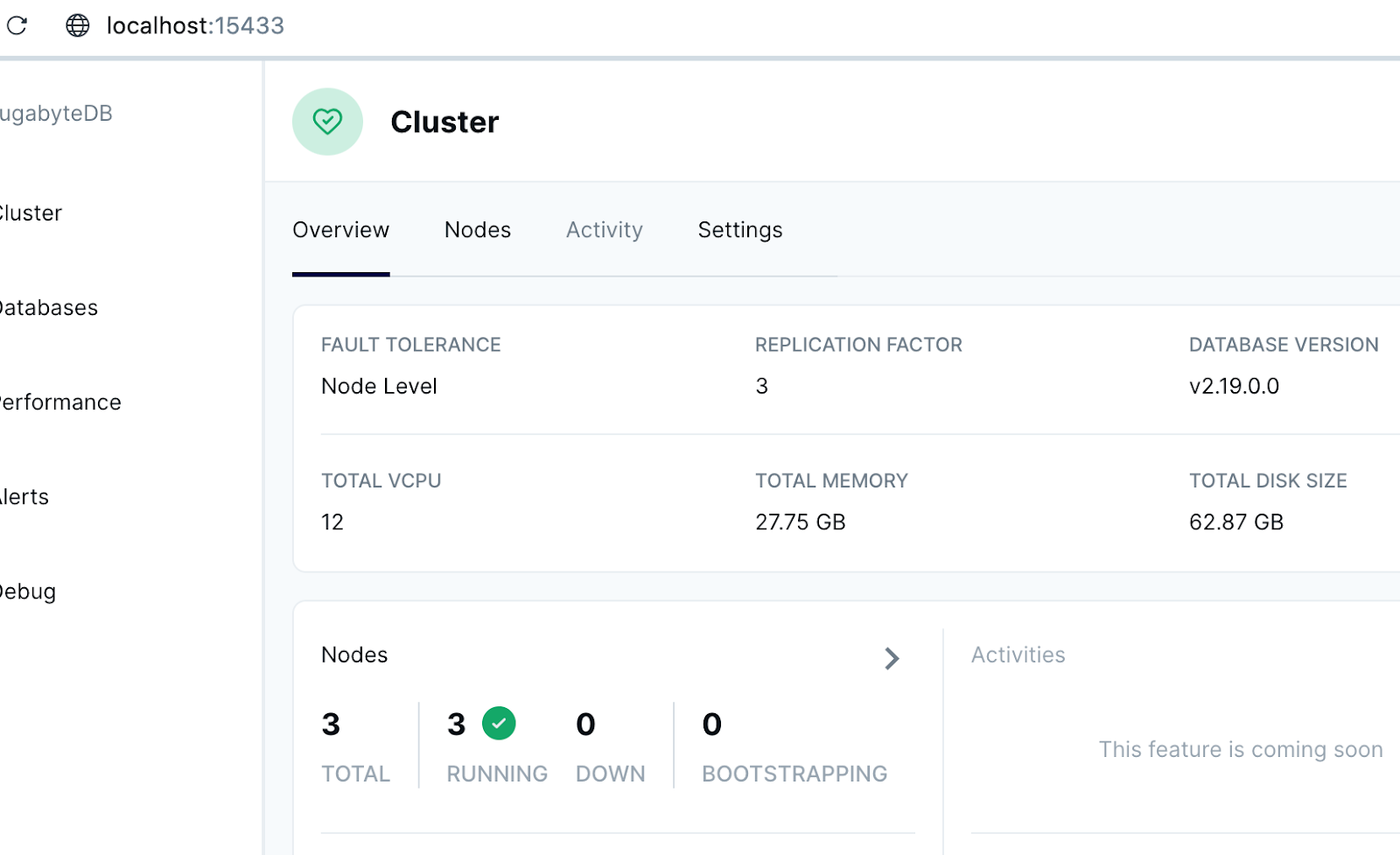
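For reference, the yb_servers() check can be run without opening an interactive session. This is a sketch, assuming ysqlsh is on the PATH inside the container and that each node listens on its own hostname; the function name yb_list_servers is just something I made up.

```sh
# Sketch only: list the cluster members as seen by one node.
# yb_list_servers is a hypothetical helper name.
yb_list_servers() {
  node=${1:-node1}
  # no -it: a TTY is not needed for a one-shot query
  docker exec "$node" ysqlsh -h "$node" -U yugabyte \
    -c "select host, port, node_type, zone from yb_servers() order by host;"
}
```

`yb_list_servers` asks node1, `yb_list_servers node3` asks node3. Every node should return the same list, one row per node in the cluster.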

Also, the "console" at port 15433 was working straight away:

Console shows a cluster with one single node, and an RF=0. And it seems there are already 10 Tablets in there. Interesting. Those probably hold the pg_catalog.

But my mission is: multi-node, preferably with RF=3. Let's keep going, and create the 2nd node:

No problem. And the function yb_servers() shows two nodes.

Let's just also check the console:

Console also shows two nodes, and still RF=0.

Wait, wasn't there also a health check? Let me try the yugabyted status command on both nodes, using docker exec on both containers:

Both nodes report Status: Running, and here it says: RF=1. We seem to have some replication. But the recommended config is at least 3 nodes. Let's continue...
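Since the same check gets repeated after every node, a small loop saves typing. This is a sketch with a made-up function name (yb_status_all), and it assumes the status output has lines mentioning the status and the replication factor, as I saw in my runs; the exact layout may differ per version.

```sh
# Sketch only: run the health check on several nodes, keeping only the
# lines about status and replication. yb_status_all is a hypothetical name.
yb_status_all() {
  for node in "$@"; do
    echo "== $node =="
    # no -it here: a TTY is not needed when the output goes through a pipe
    docker exec "$node" yugabyted status | grep -i -E 'status|replication'
  done
}
```

Used as `yb_status_all node1 node2`, and later with more node names as the cluster grows.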

Creating node3:

No problem creating node3. And again, I can log on, and can create a table. Also, the three nodes show up in select * from yb_servers().

Checking console after creating node3:

Bingo, We have RF=3.

Which now shows up in the health-check too. The doc-pages also state that the default is RF=3, and with three nodes, that seems possible, and is now achieved. But let's go on..

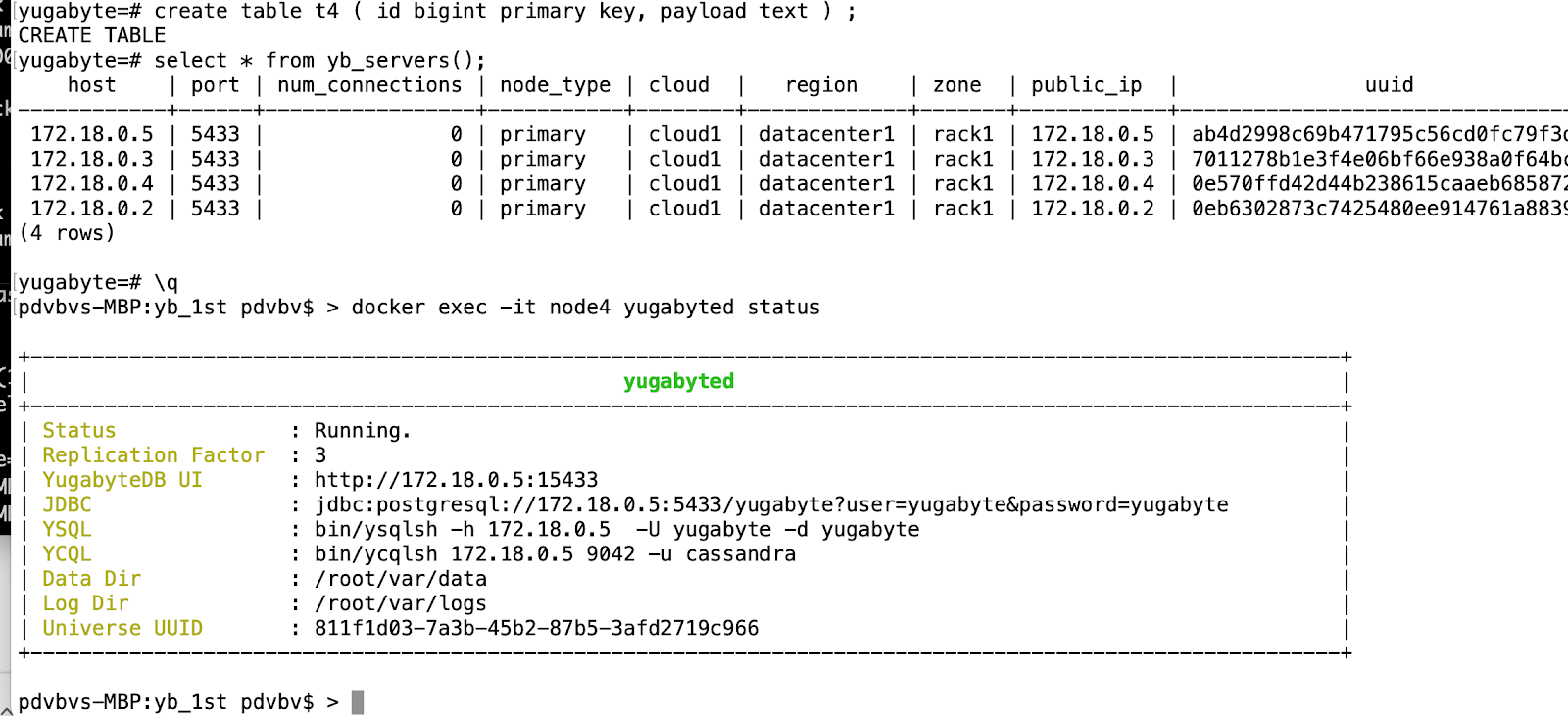

Creating node4:

No problems, I can still create tables, all four nodes show up in yb_servers(). And healthcheck looks Good:

Notice the RF is still 3. The Replication Factor didn't go up any further when we added the fourth node.

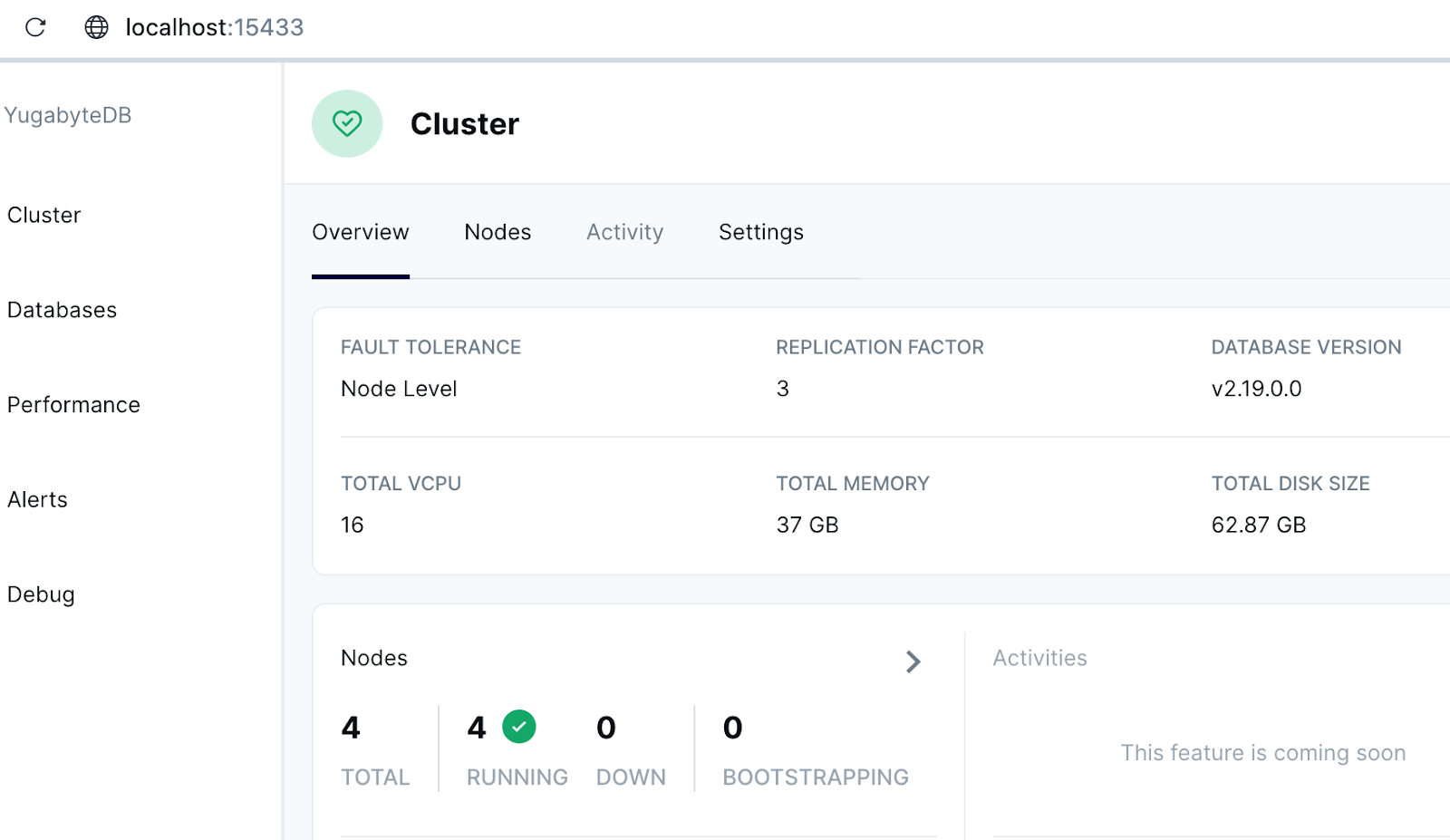

Checking console with 4 nodes:

Yep, Console agrees: There are 4 nodes and RF=3. Good to know.

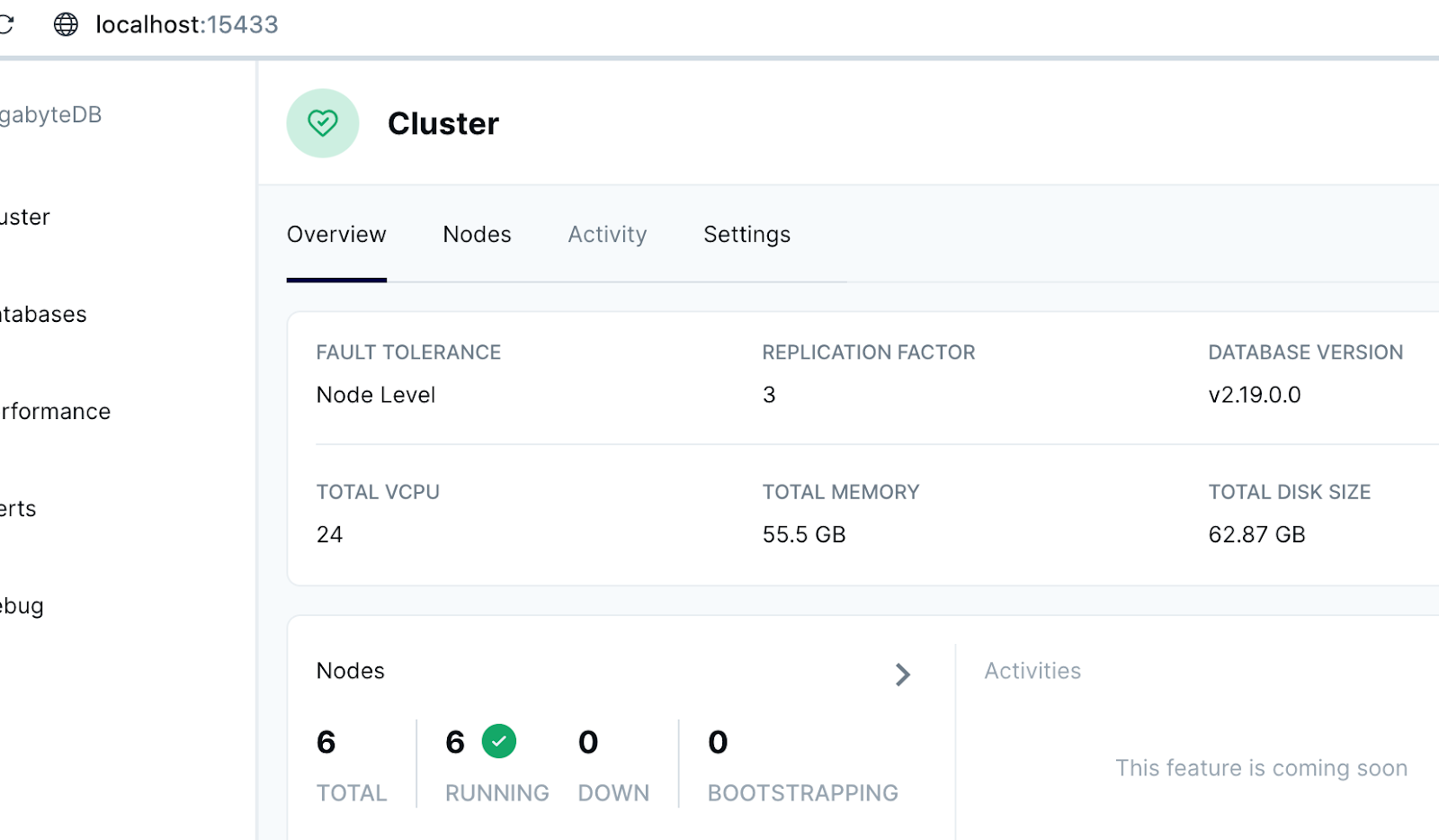

I quickly added node5 and node6, still creating tables and checking console:

Creating the last node went fine, and console confirms:

Console shows 6 (six) nodes, with an RF=3. I am slightly proud of my little datacentre-cluster...

Console looked good.

Health checks looked good.

Only my laptop seems to slow down a little. Not surprising for an MBP from 2013 (Quadcore i7, 16G RAM).

Both Console and healthcheck show: RF=3. And that is the documented default at the moment (Aug 2023, yb version 2.19).

Success.

Looks like I can create a cluster using docker network and docker-containers. I have a nice test-area to play around in. I'm curious to see how the division of data over tablets can affect storing and retrieving records, and now I have a nice Sandbox.
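To refresh the sandbox, the old containers and the network have to go first, otherwise docker complains about names that already exist. A minimal sketch, assuming the node1..nodeN and yb_net names from my script; yb_reset is a hypothetical helper name. Beware: this throws away all data in the cluster.

```sh
# Sketch only: remove the playground so the setup script can run again.
# yb_reset is a hypothetical helper; it destroys all data in the containers.
yb_reset() {
  last=${1:-6}
  i=1
  while [ "$i" -le "$last" ]; do
    # -f stops a running container, -v also drops its anonymous volumes;
    # errors for containers that do not exist are ignored
    docker rm -f -v "node$i" >/dev/null 2>&1 || true
    i=$((i + 1))
  done
  docker network rm yb_net >/dev/null 2>&1 || true
}
```

`yb_reset` cleans up six nodes, `yb_reset 3` only node1 to node3 (plus the network, which will refuse to go while other containers still use it).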

More...

PS: I'll point out two items, possibly for later playing.

In earlier experiments I have created a view to inspect "table-information" (link to previous blog), and when I "inspect" my newly created tables I notice two things:

Firstly, there seems to be no tablet for the Primary Keys. I think this is because in Yugabyte the PK, or rather the hash of the PK, determines in which tablet the records go, and there is no separate PK storage: the whole record is stored "with the PK". (verify, did I understand this correctly?)

Secondly, the number of tablets for each table seems to correspond with the number of nodes that were connected at the time of creation. I deduce that Yugabyte may try to "shard my table over all nodes" by default. Once I get to a significant number of nodes, this may give me (by default behaviour?) a lot of tablets when adding a small table?
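One way to poke at this is to create one table with default settings and one with an explicit tablet count, then compare. The sketch below assumes the SPLIT INTO clause and the yb_table_properties() function behave in your version as I believe they do. The table names t_default and t_one and the function name yb_tablet_demo are made up for the example.

```sh
# Sketch only: compare the default tablet count with an explicit one.
# yb_tablet_demo, t_default and t_one are hypothetical names.
yb_tablet_demo() {
  node=${1:-node1}
  docker exec "$node" ysqlsh -h "$node" -U yugabyte \
    -c "create table t_default (id int primary key, payload text);" \
    -c "create table t_one (id int primary key, payload text) split into 1 tablets;" \
    -c "select num_tablets from yb_table_properties('t_default'::regclass);" \
    -c "select num_tablets from yb_table_properties('t_one'::regclass);"
}
```

If my deduction is right, the first count follows the number of nodes at creation time, while the second stays at 1 regardless.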

From earlier investigations, I also happen to know that "colocation", i.e. reducing the total number of (near-empty) tablets by letting small tables share one, is often Quite Beneficial when dealing with many small tables. Something to Think About.
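For later playing: colocation is switched on per database at creation time. A sketch, assuming the "with colocation = true" spelling is the one your version accepts (I believe older releases spelled this option differently, so check the docs for your version). The function name yb_colocated_db and the database name colo_demo are made up.

```sh
# Sketch only: create a database in which small tables share a tablet.
# yb_colocated_db and colo_demo are hypothetical names; the exact option
# spelling is an assumption and may depend on the version.
yb_colocated_db() {
  node=${1:-node1}
  db=${2:-colo_demo}
  docker exec "$node" ysqlsh -h "$node" -U yugabyte \
    -c "create database $db with colocation = true;"
}
```

After `yb_colocated_db node1 colo_demo`, tables created inside colo_demo should end up together instead of each getting their own set of tablets.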

To Be Continued...

(depending on time and interest...)
