Distributed data(base), some simple experiments.

TL;DR: in distributed databases (Example: Yugabyte), it helps to know how to define your tables. The default behaviour is Optimised (sharded, distributed) for Very Large Tables. But Small tables also need attention.

Too Much of a Good Thing...

Background

Distributed Databases are The Future.

That is why I began to experiment with Yugabyte. I managed to create a 6-node (yes, Six Nodes) cluster in no time. And because Yugabyte is fully Postgres Compatible my good-old pg-scripts work straight away.

From the install-story, I found that by default all my tables seem sharded over 6 tablets and that was something I wanted to investigate futher.

So, Let's Play....

The Demo

I needed some demo-tables first. With YB comes the "northwind" demo (link). This demo was promptly installed from the command-prompt from any of the nodes (regardless of which node: in my case they are all equal).

I shell-ed into the container, and typed

# Yugabyted demo connect

And there it was. I also use DBeaver (recommended) so I did some snooping around first. Then I decided to do a little test.

Selecting from a table

The smallest table I could find seemed the "region": two columns and 4 records. Ideal for very simple demos (the blog is called "simple pg dba" for a reason..).

This table could have its 4 records sharded over 6 tablets (and replicated with RF=3, so that could be 3x6 = 18 tablets...?).

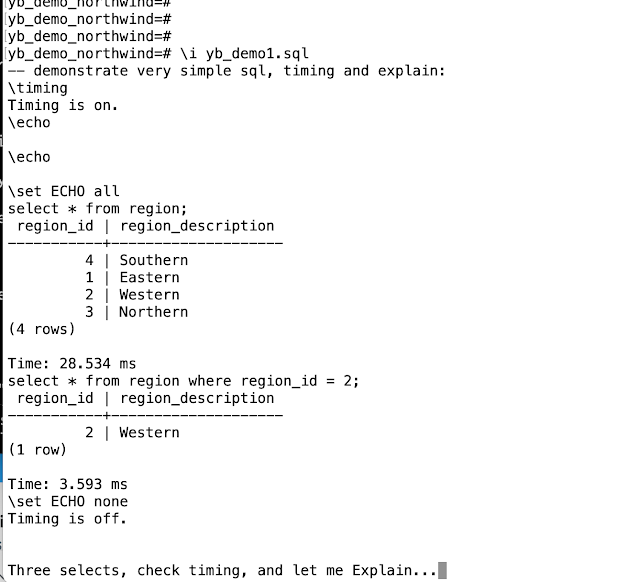

But I have to start simple! I started my research with two selects:

select * from region ;

select * from regio where region_id = 2 ;

Let see what happens:

Now, because this whole 6-node cluster is running on 1 laptop, the timing-info is not reliable, and can vary wildly. But it is notable that the select of the full-set takes 28ms and the selection of 1 records via the PK takes only 3ms.

On an "under resourced" system like this laptop it is much more important to measure "effort" rather than timing. If we can count the Actions (calls, storage-calls, buffer-gets, rpcs, whatever you call them), that is probably more relevant information. I'm going to use "explain" with the Analyze and the DIST(tributed) options to have a closer look:

Explain tells us that selecting all 4 records was done via a Seq(uential) Scan, this took 13ms, and 6 storage read requests. That could correspond to 6 tablets.

The Explain for the single-record, where region_id=2, tells us that it used and Index-Scan, that it took 1ms, and only 1 storage read request. That seems a lot more efficient. Although there may be a cache-effect in play as well.

It is what I expect from an index-lookup (unique index at that): to be Efficient.

But the sequential scan of the full table seems relatively in-efficient; 6x the calls ? Would that have something to do with the "six tablets" ?

Too Much of a Good Thing ?

Re-run with less tablets

To further test, I created a copy of the region table, using the split-option to order it to split into just 1 tablet (hah!).

Note (and don't worry): Resilience is Still Guaranteed! It took me a few minutes to realise as well: 1-tablet doesnt mean you create a single-point-of-faillure. The 1-tablet (master) is still replicated with RF=3 (e.g. it has 2 followers). And I actually messed with the cluster a little, even destroyed a few nodes, to prove that to myself. I've not lost data yet, but I keep trying. More on that maybe later.

First, here is what I did to create the similar single-tablet-table, and select the data into it. Then verify using the same two queries:

Result-data are the same, and timing of the selects is similar, timing on an overworked laptop is of course... meh.

But look at the explain of the first query:

Storage Read Requests: 1.

The explain of the sequential-scan that was used to pick up all four records now did only 1 storage call (to the single tablet?). The timing of both queries is also below 10ms, which seems much more efficient than the 28m on previous attempt. There is probably a cache-effect on my system that benefits the timing.

My first impression is : Less Tablets means less Storage calls on seq-scan. Hence for smaller tables this is probably more efficient.

Quick view into tables per table...

Just to verify the tablets per table: The new table Actually has only 1 tablet assigned to it (using the view ybx_tblnfo from previous blog...):

Notice how the newly copied table, region1, has only 1 tablet assigned to it, and that the tables are not colocated (different story).

Also notice the primary-keys are listed as "relations" (which is default postgres behaviour), but they don't have any tablets (storage) assigned.

Yugabyte effectively stores all records "behind the PK", so the PK doesn't need separate storage-entities (this doesn't mean the data is by default ordered-by-pk, on the contrary, because the distribution is by default done on hash.. another topic, sometime..)

There is Always More...

I did similar testing with "colocated". That is another Option to investigate for small-tables. But co-location is set per-database. Would like to discuss with the YB folks on that topic.

Also, how good is my amateur-clusters with 6 equal nodes. Should I investigate separate master/t-server notes ?

The "breaking of the cluster" is worth one or more separate stories, but I don't know enough about that yet to "tell relevant tales". And how relevant is testing in docker on a laptop... Maybe later.

One more hobbyhorse: Ordering. The PK-hash, and the non-consequence for any ordering of data. YB is similar to MS-SQL in that it uses PK to locate/order the data. Oracle-wise this concept would half-qualify as an Index-Organized-Table (but no Ordering involved bcse Hash!).

However, Let me just say : Select pk, col1, col2,... from table ;

...does Not, and Should Not, give you any Guaranteed Order-By. You want ordering??? Then you must specify order-by-clause. Otherwise no ordering. Long+different topic...

Lessons on Tablets, if any...

Tablets are Good. But small tables may not need Too Much of them.

It could be that having 6 (six) tablets is hurting us in some cases. Small tables (and that is the often the majority in legacy-systems) may need some protection against "over distributing". And given that minimum tablet size is often taken in MB or GB, having a multitude of shards (tablets) for small sets will probably waste storage (and Valuable Cache-space!)

As a newbie to Yugabyte, I have to be very careful, but I think I know a thing or two about sharding and distributing work and especially distributing Data from projects as far back as the early 00s. I especially know that "chattyness" is another nasty problem that did not go away with "faster hardware"... Hence, I am also Very Grateful to Yugabyte for allowing "Explain (Analyze, DIST)" on queries.

The Yugabyte explain allows me to see into the backend-calls, listed here as "storage read/write requests". In some cases, it may be a good idea to minimize these requests.

Did I find bad things: Not really.

Is it all fixable: Yes, Definitely, and I think I demonstrated that.

To Be Continued...

Yugabyte is Conceptually a Very Interesting database, possibly "The Future".

I hope I can investigate a bit more soon-ish. Stay Tuned.

Ps : DM me if you want the (still somewhat messy) sql-script of the demo.

Comments

Post a Comment